Ollama on FreeBSD using GPU passthrough

LLMs and “AI” are a hot topic, and despite the hype, there are practical applications that convinced me to invest time and money in setting up a local LLM. This article isn’t about my use cases, but about the process of getting Ollama up and running with GPU acceleration on FreeBSD using bhyve. This is a relatively advanced setup that requires familiarity with FreeBSD, virtualization, and NVIDIA drivers.

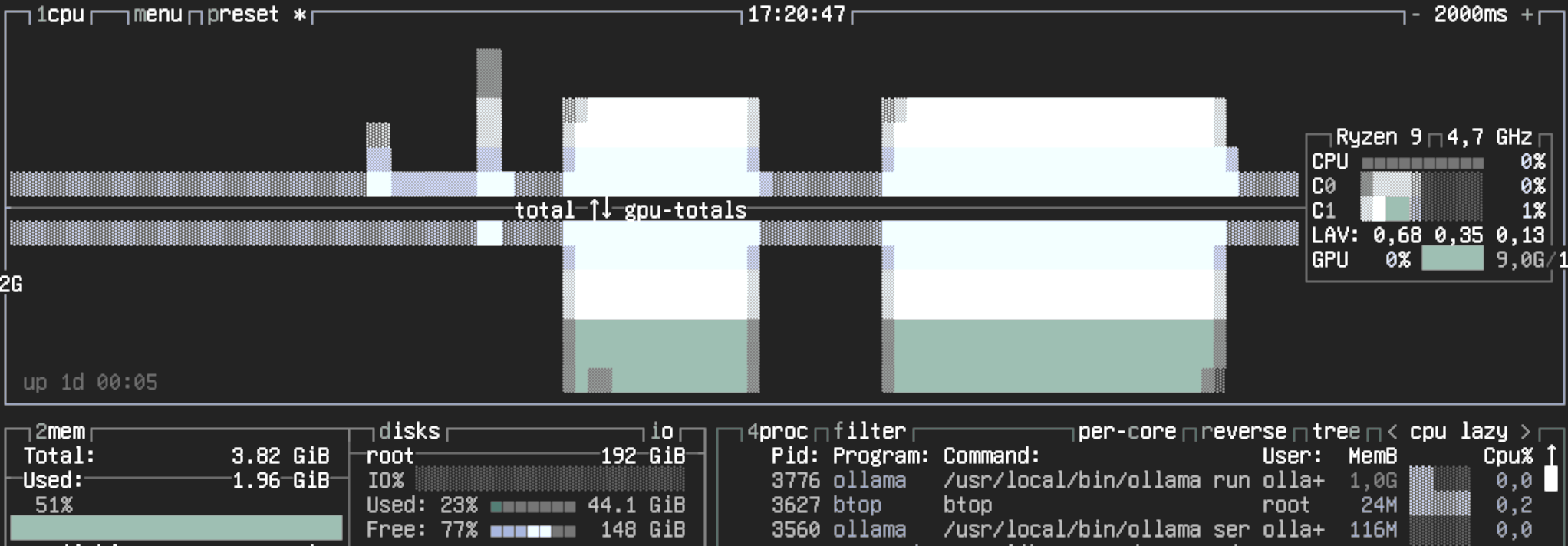

Initially, I explored running Ollama within a FreeBSD jail, but it only used the CPU, quickly saturating all available cores. To take advantage of my GeForce RTX 3060 and its 12 GB of VRAM, I switched to a bhyve VM with GPU passthrough. I prefer running my VMs with UEFI boot, as this makes migration to different hardware or hypervisors much easier. Let’s get started:

2025-08-15